Hands-on review: Nvidia GeForce RTX 2080 Ti FE

For the last few months FutureFive's Darren Price has been tinkering with a couple of Nvidia's top-of-the-range GeForce RTX 2080 Ti Founders Edition graphics cards.

It's sometimes difficult, with technology offering such clearly unprecedented potential, to produce a fair, real-world-use review without sounding like a paid shill. Benchmark results are fine only up to a point, and the same can be said for tech demos. The lack of games taking advantage of the GeForce RTX 2080 Ti makes reviewing the card rather challenging.

So, whilst other sites were tripping over themselves to be first on the block with their breathy, fawning review of the RTX 2080 Ti, my response to Nvidia was that I couldn't in all good conscience review a GPU when I didn't have anything to run on it.

When Nvidia launched their RTX 20-series graphics cards in September 2018, we all gasped. At twice the cost of the GTX 10-series GPUs they were assumed to be replacing, the pricing was a bit harsh. Adding insult to injury, gamers with the flagship RTX 2080 Ti were only getting about 40% more power than a GTX 1080 Ti with their existing games collection. In my test I got a better 3DMark score with 2x GeForce GTX 1080 Ti GPUs than I did with one RTX 2080 Ti.

All I could tell you (and I did in my preview) was that you should expect around a 40-50% increase in performance over the GTX 1080 Ti. Were the price closer to that of the GTX 1080 Ti at launch, I'd have told you all to go out and buy one. But it wasn't. That price tag is for a load of features that had zero support in any games when the card launched.

As gamers, we know Nvidia as the guys that make our game graphics look good. I've been using their tech ever since I replaced my 3DFX Voodoo2 with an Nvidia Riva TNT card. And I've never looked back, well, apart from that time I bought a GeForce FX 5200 by mistake.

For the last six years, Nvidia have been developing deep learning and artificial intelligence hardware based on their GPU technology. It turns out that, for analysis applications, the way GPUs process data makes them much more suited to the job than the sequential way that CPUs (which are still based on 1970s technology) work.

With Nvidia tech powering everything from facial recognition technology to self-driving cars, some of this AI/deep learning technology has come full circle. It's this AI technology that makes Nvidia's RTX Turing GPUs special.

The Turing GPU technology's upgraded CUDA cores give that 40% boost to your DX11/DX12 game library. The addition of RT (raytracing) and Tensor (AI) cores gives the card an almighty boost for software written with the platform in mind. And that's the thing. To get the most out of Nvidia's RTX cards, developers need to have accommodated the new technology in their games. Up until very recently, that was not the case.

Whilst there is a list of games that will support the RTX-exclusive features, most are yet to be released. Of those that already have been released, Shadow of the Tomb Raider and Battlefield V, only Battlefield V supports RTX ray-tracing at the moment. Battlefield V uses the RTX's RT cores to provide real-time raytraced reflections in the game.

Previously, reflections have involved some programming trickery called screen-space reflections. Still a complex process, but with a very real limitation. The reflections created this way will only show objects on the screen. Anything happening off-screen will not be reflected. Now I've pointed it out, you are likely going to notice it all the time.
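To make that limitation concrete, here is a toy sketch in Python. It is not how Battlefield V or any real engine implements reflections; it is a simplified top-down model in which the `SceneObject` class, the 90-degree field of view and the object positions are all assumptions made up for illustration. It also ignores occlusion and the actual angle of the reflection. The only point it makes is that a screen-space technique can draw on what the camera already sees, whereas a ray-traced approach can query the whole scene.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """A point in a top-down view of the scene (hypothetical toy model)."""
    name: str
    x: float  # metres to the right of the camera
    z: float  # metres in front of the camera (negative means behind it)


def is_on_screen(obj: SceneObject, fov_degrees: float = 90.0) -> bool:
    """True if the object lies inside the camera's horizontal field of view."""
    if obj.z <= 0:
        return False  # level with or behind the camera: never rendered
    half_fov = math.radians(fov_degrees) / 2
    return abs(math.atan2(obj.x, obj.z)) <= half_fov


def screen_space_candidates(scene, fov_degrees: float = 90.0):
    """Objects a screen-space technique could reflect: only rendered pixels."""
    return [o.name for o in scene if is_on_screen(o, fov_degrees)]


def ray_traced_candidates(scene):
    """Objects a ray-traced technique could reflect: anything in the scene."""
    return [o.name for o in scene]


# Assumed example scene: a puddle and a soldier ahead, a fire behind the player.
scene = [
    SceneObject("puddle", 0.0, 2.0),
    SceneObject("soldier", 1.0, 5.0),
    SceneObject("fire", 0.0, -3.0),
]

print("Screen-space can reflect:", screen_space_candidates(scene))
print("Ray tracing can reflect: ", ray_traced_candidates(scene))
```

In this made-up scene the fire sits behind the camera, so it never appears in the screen-space list, which is exactly the gap that ray-traced reflections close.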

In Battlefield V, you will see flames reflected in puddles even if you can't see the fire. As humans we use this ability all the time; in games, though, we've been conditioned to accept the limitations of graphics technology.

With ray-traced reflections in Battlefield V, you could, for example, see a Nazi reflected in a puddle before he came into view. There's no other way, apart from scripting the effect, for the light from an explosion behind you to illuminate objects in front of you. Battlefield V's ray-traced reflections add so much to the visual fidelity of the game, producing a more realistic, natural environment.

Nvidia's Turing GPUs offer developers a degree of flexibility, allowing them to choose what RTX features they want to employ in their games. For instance, when they finally get around to patching the game, Shadow of the Tomb Raider is going to use the RT cores to provide raytraced shadows.

Probably more important than real-time raytracing is the Tensor cores' deep learning super-sampling (DLSS) technology. Square Enix have just patched Final Fantasy XV to enable DLSS, but only for gamers playing in 4K resolution.

DLSS works by employing the AI processing power of the GPU's Tensor cores to reinterpret images, cleaning them up based on deep learning data generated by Nvidia's super-computers. DLSS takes the job of anti-aliasing (the removal of the jagged steps around objects) off the GPU's CUDA cores and gives it to the Tensor cores, the end result being a faster frame rate and a better quality image.

This training data is generated by Nvidia on a game-by-game basis. It's not something that can be easily patched in, so don't expect any of your old games to support DLSS any time soon.

Wolfenstein II: The New Colossus takes advantage of another feature of the Turing architecture, Content Adaptive Shading. This technology focuses the RTX card's processing power on the parts of an image that need it most. The result is a small gain in frames per second for a game that already runs blisteringly fast with an RTX 2080 Ti, but the effect will likely be a lot more dramatic for owners of the newly announced RTX 2060, as well as the RTX 2070 GPUs.

On the horizon, both 4A Games' Metro Exodus and Bioware's Anthem will be supporting RTX features. Metro will be taking advantage of real-time ray-traced global illumination when it releases next month. Anthem, on the other hand, will be utilising Nvidia's DLSS.

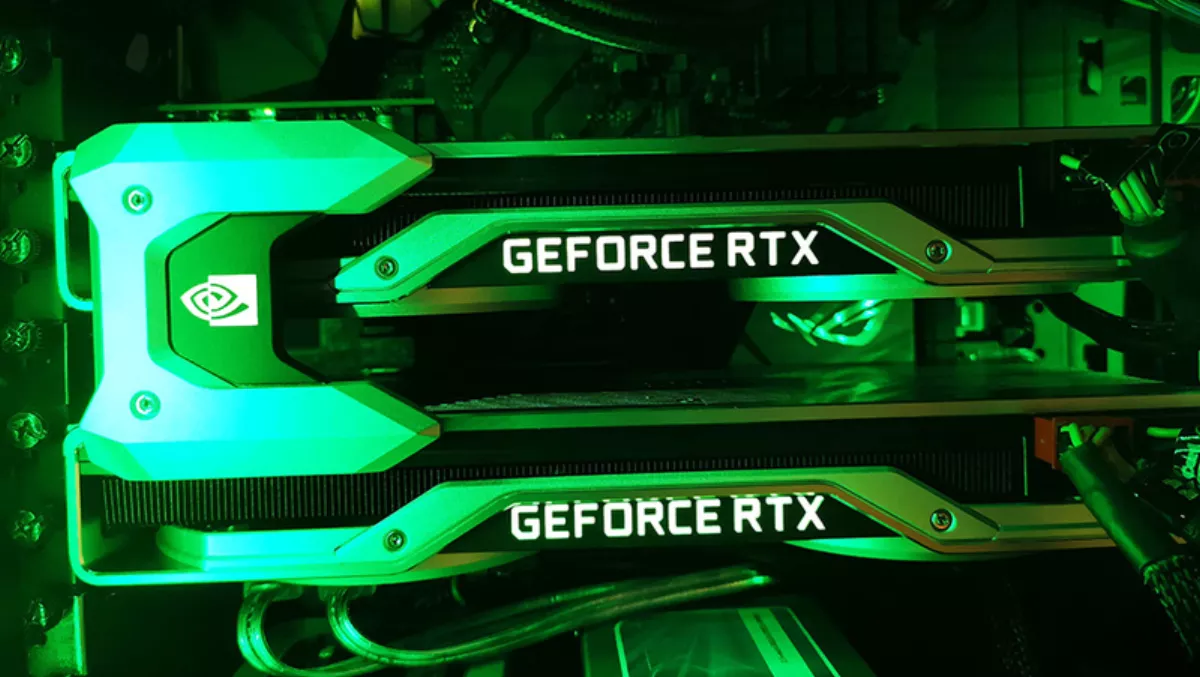

What about SLI, running two RTX 2080 Ti GPUs? For two weeks I ran the RTX 2080 Ti without issue, awaiting the arrival of a second 2080 Ti and the required NVLink device to couple them together.

The two-card set-up didn't go well at first, with the PC not properly recognising the second card. Reinstalling the drivers seemed to do the job. Benchmarking using 3DMark was touch and go, with some benchmark tests failing to validate. But, when it did work, the results were rather breathtaking.

3DMark Time Spy (Core i7 8700K):

- GTX 1080 Ti 2-Way SLI: 3DMark score 15351
- RTX 2080 Ti: 3DMark score 12783
- RTX 2080 Ti 2-Way SLI: 3DMark score 18207
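For anyone who wants to put those Time Spy numbers in perspective, the short Python sketch below works out each result relative to a single RTX 2080 Ti. The scores are the ones quoted above; the `percent_gain` helper is simply a name chosen for this illustration.

```python
# 3DMark Time Spy scores quoted in this review (Core i7 8700K test rig).
scores = {
    "GTX 1080 Ti 2-Way SLI": 15351,
    "RTX 2080 Ti": 12783,
    "RTX 2080 Ti 2-Way SLI": 18207,
}


def percent_gain(score: float, baseline: float) -> float:
    """Percentage by which `score` exceeds `baseline` (negative if lower)."""
    return (score / baseline - 1) * 100


baseline = scores["RTX 2080 Ti"]
for name, score in scores.items():
    gain = percent_gain(score, baseline)
    print(f"{name}: {score} ({gain:+.1f}% vs a single RTX 2080 Ti)")
```

On these figures the pair of GTX 1080 Ti cards lands about 20% ahead of one RTX 2080 Ti, while adding a second RTX 2080 Ti is worth roughly 42%, well short of double.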

The excitement was short-lived, though. First off, the two cards didn't play well with the test rig's triple-monitor NVSurround. The NV Control Panel acted up with every other boot. I get the feeling that the RTX-enabled drivers still need work and are a little flakier than the previous iteration of GeForce drivers, which have been pretty much bomb-proof for me.

Installing the second RTX 2080 Ti also seemed to bring some system instability with it. There's nothing worse than a game crashing to the desktop, especially if I've not saved it for ages. Just Cause 4, Assassin's Creed Origins and Assassin's Creed Odyssey all started freezing and crashing. Interestingly, none of these games actually benefit from the multi-GPU set-up.

One of the potential causes of my multi-GPU instability could be the way the RTX 2080 Ti Founders Edition cards are cooled. Rather than a single fan exhausting heated air from the rear of the case, the 2080Ti uses two fans drawing air into heatsink fins perpendicular to the circuit board. The system also includes a unique vapour-chamber design which, in theory, should cool the card more efficiently than Nvidia's previous cooling solution.

Regardless of these apparent efficiencies, the heat from the GPU exhausts into the case and not out of the back of the case, as with the GTX 1080 Ti Founders Edition. This increases the ambient temperature inside, with the hot air from the GPU being expelled via the case/CPU radiator fans.

This may be fine for one card, but two cards with a small air gap between them will not fare so well. This may explain some of the inconsistent 3D Mark results that I was getting: the benchmarks getting worse as the cards got warmer and the temperature throttling kicked in.

Right now, as cool as it is to shovel NZ$4000 of GPUs into your PC case, there's not really anything to be gained. Once the tech and the drivers mature a bit, 2xSLI/multi-GPU 2080 Ti cards may be worth another look, but not right now.

No recent AAA games use SLI (DX11) or explicit multi-GPU (DX12). Shadow of the Tomb Raider, Battlefield V, Just Cause 4 and Assassin's Creed Odyssey only use one GPU. Some older DX12 games, like Rise of the Tomb Raider, do use multi-GPU, but unless you are going 8K, one 2080 Ti should have no problem running the game. Older DX11 SLI-capable games, again, will hardly tax one 2080 Ti.

Lack of support is the big problem with SLI/multi-GPU systems. With DirectX 12, it's up to developers to implement multi-GPU support; it's no longer a simple case of Nvidia adding an SLI profile to the driver. These RTX 2080 Tis burn up to 300W apiece. The test rig has a man-sized 1500W PSU that's more than capable of powering two of these cards, but do I want to be paying the electric bill for kit that I'm not using? Of course, after removing the second card the stability problems all went away.
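As a rough feel for that electric bill, the Python sketch below estimates what one extra card could cost to run over a year. Only the 300W figure comes from this review, and it is a peak draw, so this is a worst case. The three hours of gaming a day and the 0.30 per kWh tariff are placeholder assumptions; swap in your own numbers.

```python
def annual_energy_cost(watts: float, hours_per_day: float,
                       price_per_kwh: float, days: int = 365) -> float:
    """Cost of running a device at a constant power draw for `days` days."""
    kwh = (watts / 1000) * hours_per_day * days
    return kwh * price_per_kwh


# 300 W is the per-card peak quoted in the review.
# hours_per_day and price_per_kwh are assumed values for illustration only.
second_card = annual_energy_cost(watts=300, hours_per_day=3, price_per_kwh=0.30)
print(f"Estimated yearly cost of the second card: {second_card:.2f}")
```

With those assumed inputs the second card adds a little under 100 a year in your local currency, on top of the purchase price, for no gain in most current games.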

Battlefield V is still the only showcase for Nvidia's real-time raytracing tech. Metro Exodus is coming very soon, but in the meantime, Nvidia supplied me with a copy of the Star Wars Reflections demo. You may have seen a video of the demo running. It depicts two First Order Stormtroopers enduring a few awkward moments in a lift shared with their boss, the chrome-plated Captain Phasma.

Not only does the demo look real, the scene bounces reflections across the walls, the troopers and Captain Phasma's ultra-shiny armour. It looks amazing. Too good to be true. It was only when watching the demo live during an Nvidia presentation that I started to come around and accept that a GeForce RTX 2080 Ti was actually running the demo.

Running the Star Wars Reflections demo in the test rig with the GeForce RTX 2080 Ti finally confirmed to me that it was real and not just some Nvidia sleight-of-hand. Switching the 24fps limit off gave me an impressive 50fps running the demo at 1440p.

From what I've seen, played and run on the test PC, in six months' time I think we are going to look back at the RTX as a game changer. Whether it will be up there with the 3DFX Voodoo2 or Nvidia's own GeForce 8800 GTX GPUs, I'm not sure, but we are witnessing a seismic shift in the way games process images.

The CUDA cores give you about a 40% general performance boost over the last iteration of Nvidia GPUs straight off the bat. This will improve performance across your whole games library, making high-quality 4K gaming finally a reality. VR gamers will also welcome this extra horsepower, making their in-game experiences slicker and more realistic.

With DLSS and CAS we are only seeing the start of what the Tensor AI cores can bring to our games. I'm expecting a few surprises as developers get to grips with the technology. It's likely that the technology will also find a use away from games, as Nvidia is already working with Adobe to incorporate AI-based image-manipulation plugins into Photoshop.

The RT cores will have a dramatic effect on the way we play games. Even in Battlefield V, the additional visual feedback that you get from off-screen explosions reflecting back at you adds to both the realism and atmosphere of the game. In time, players will learn to use this extra information to improve their game. Imagine being able to see an approaching opponent reflected in a window, and being able to act upon it. One day, Rainbow Six players may be able to use a mirror to check around a corner. How about proper ray-traced glare from the sniper's scope as they train their barrel in your direction?

Nvidia's RTX 2080 Ti Founders Edition is a glorious piece of kit. It's maybe ahead of its time, and certainly too expensive. But the potential is there. Should you buy one, though? If it fits your budget, yes. If you are reading this to try and justify your future purchase, I say go for it.

The GeForce GTX 1080 Ti is still a contender, though. But, if you are getting a new PC and want a bit of future-proofing without breaking the bank, maybe the RTX 2070 is a better fit, offering all the potential RTX goodies without the eye-watering price tag. The newly announced RTX 2060 brings the Turing technology to the great unwashed at almost a quarter of the price of the RTX 2080 Ti. But, if you want the most powerful graphics card available, the GeForce RTX 2080 Ti is the card for you.